The first spark changed everything.

Picture that moment—perhaps 400,000 years ago—when a human first captured lightning’s gift or coaxed flame from friction. The acrid smoke stung their eyes, but the warmth that spread through their fingers promised something unprecedented. In that instant, the world split into before and after.

The person holding that dancing light couldn’t have imagined what they’d unleashed: the ability to cook food that would expand brain capacity, forge metals that would build civilizations, or power engines that would carry humans to the moon.

But they also couldn’t foresee the destructive potential they’d awakened. That same flame would consume forests, level cities, and become humanity’s weapon against itself.

The Early Adopters and the Burned Villages

In those early days, some tribes embraced fire immediately. They learned to contain it, feed it, and direct its power. The smell of cooked meat filled their caves. Flickering light extended their days past sunset. Predators retreated from the glow that meant humans were near.

These early adopters gained enormous advantages: better nutrition, safer settlements, expanded capabilities. They thrived while others struggled with raw meat and huddled in winter darkness.

Yet fire demanded respect. Those who rushed to use it without understanding its nature paid dearly.

Imagine the first village that burned. The family who thought they’d mastered fire, only to wake to flames consuming everything they’d built. The children who learned too late that this beautiful, dancing light could steal their breath and scar their skin.

Wars were fought with fire as a weapon, turning human creativity into human destruction.

The tribes that prospered weren’t necessarily the ones who discovered fire first—they were the ones who learned to harness it systematically, with proper foundations and safeguards.

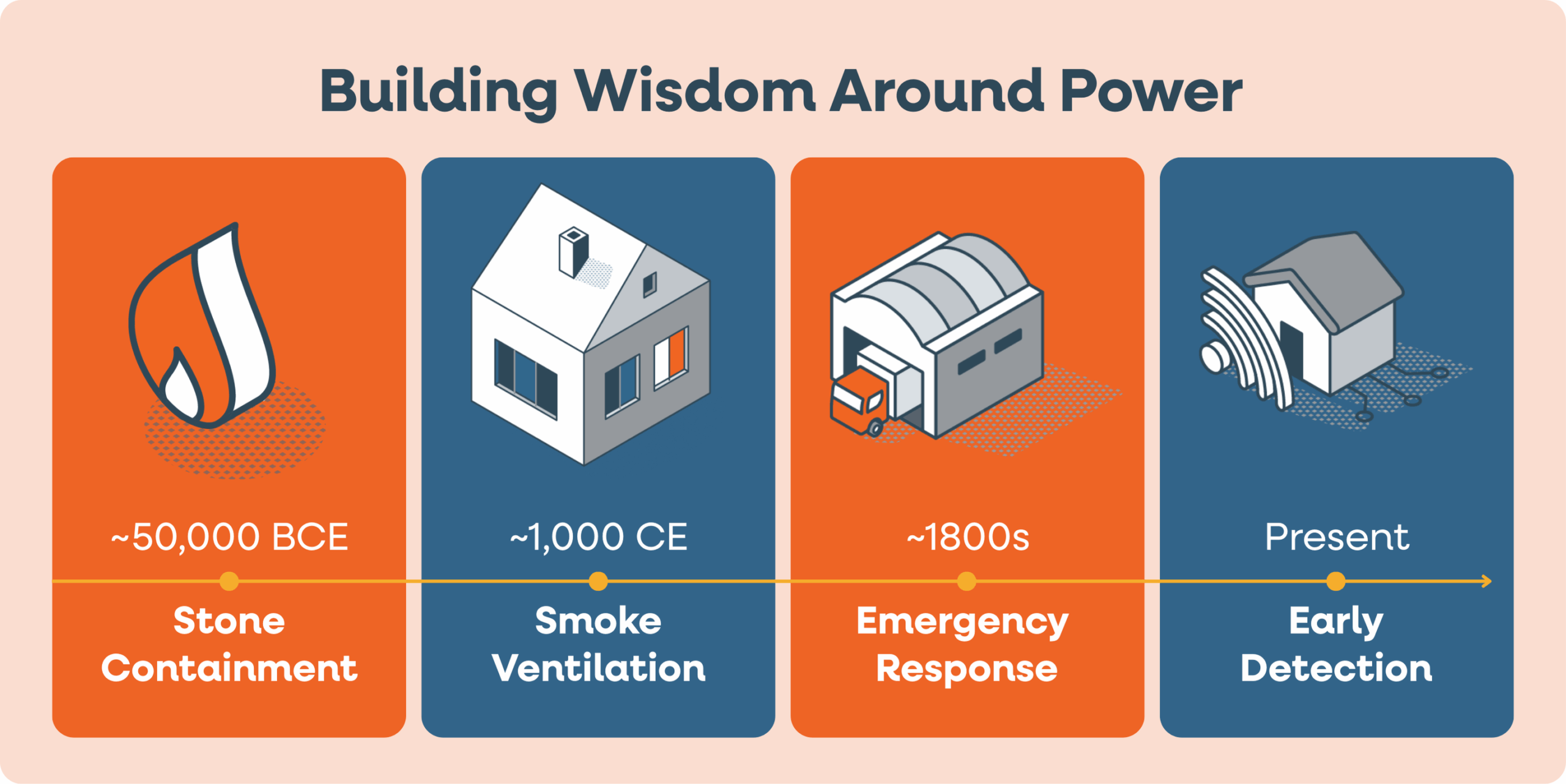

Building Wisdom Around Power

Over generations, humanity developed a sophisticated relationship with fire. We built stone hearths that couldn’t burn. We designed chimneys that carried smoke safely away. When London burned in 1666, we didn’t abandon fire; we rebuilt in brick and stone and went on to organize dedicated fire brigades.

Today, most cities have professional fire departments with specialized equipment, rigorous training, and coordinated emergency response systems that can mobilize in minutes. We established safety protocols born from tragedy and loss.

Most importantly, we taught our children. “Don’t play with fire” became fundamental wisdom passed from parent to child. Fire exits became standard. Fire drills became routine. We embedded respect for fire’s dual nature, its incredible power to create and its equal power to destroy, into our very culture.

This cultural evolution didn’t happen overnight. It took centuries of trial and error, of burned fingers and burned villages, of innovation and hard-learned lessons. But eventually, humanity didn’t just use fire—we mastered it. We learned to channel its power responsibly while guarding against its capacity for destruction. Fire became the foundation of modern civilization, but only because we learned to respect its power and implement proper safeguards.

For thousands of years, we believed fire was humanity’s most transformative discovery.

We were wrong.

Today’s Pivotal Moment

Today, we stand at another pivotal moment.

We have just discovered fire again. Except this time, we call it artificial intelligence.

Like that first flame, AI promises to transform everything. It can amplify human capability in ways we’re only beginning to understand. Early adopters are already seeing remarkable advantages: insights that required armies of analysts now flow from algorithms, strategies that demanded years of experience now crystallize in moments, and tasks that consumed entire teams now complete automatically.

But here’s what should terrify us: Just as with fire, AI carries profound risks that mirror humanity’s earliest struggles with uncontrolled power.

The New Burned Villages

Right now, as you read this, AI systems are being weaponized in ways that would make our fire-wielding ancestors shudder.

Imagine receiving a phone call from your bank—except it’s not your bank, it’s an AI that’s learned to perfectly mimic your banker’s voice, asking for your account details. Picture watching a video of a political candidate saying something outrageous—except they never said it; an AI created a deepfake so convincing that you literally cannot believe your own eyes and ears.

Consider Sarah, who received a frantic video call from her daughter asking for emergency money. The voice was perfect. The face was familiar. The fear seemed real. Sarah wired $3,000 before discovering she’d been talking to an AI deepfake; her daughter was safe at school, unaware that her identity had been stolen and weaponized.

Bad actors are already using AI to clone voices for deception, generate fake identities for fraud, and create convincing misinformation that manipulates elections and divides communities. AI tools can be exploited to launch sophisticated cyberattacks, steal personal identities, and compromise privacy and security on an unprecedented scale.

The Threat to Truth Itself

More insidiously, AI’s ability to analyze vast amounts of personal data enables new forms of social manipulation. Right now, AI systems can deliver personalized propaganda designed specifically to influence your opinions, creating “information bubbles” that isolate you from alternative perspectives while you remain completely unaware it’s happening.

How would you know if the next compelling article you share was crafted by an AI specifically to manipulate your emotions and shape your political views?

The World Economic Forum’s Global Risks Report 2024 ranked misinformation and disinformation, much of it AI-driven, as the most severe risk over the following two years, and the 2025 edition again placed it near the top of its short-term ranking, ahead of many climate and economic risks. We risk creating “social feedback loops that undermine any sense of objective truth,” where debates about what is real become debates about who gets to decide what is real.

Yet some are showing us a different path.

Learning from Our Ancestors

The pattern is hauntingly familiar, but so is the solution. Just as our ancestors faced the choice between reckless adoption and wisdom-guided mastery of fire, we face the same choice with AI.

Some organizations and nations, like those early fire-adopting tribes, are building systematic approaches to AI. They’re establishing ethical frameworks, creating governance structures, and learning to direct this new power responsibly. Companies like Anthropic and OpenAI are implementing safety measures. The European Union has adopted the AI Act, a comprehensive regulatory framework for AI. Some businesses are demanding transparency in AI decision-making and building human oversight into their systems.

Meanwhile, others rush forward without safeguards, treating AI as just another software tool rather than recognizing it as a fundamental force that can reshape human relationships, democracy, and society itself.

We’re in those critical early centuries with fire all over again. The individuals, organizations, and societies that will thrive aren’t necessarily the ones using the most advanced AI—they’re the ones learning to harness it with the same wisdom our ancestors developed around fire.

The Choice Before Us

The question isn’t whether AI will reshape human civilization. Fire settled that debate 400,000 years ago when the first human chose flame over darkness.

The question is whether we’ll be among those who learn to master this power responsibly—or among those whose communities burn while we’re still debating whether AI poses real risks.

Just as our ancestors developed fire departments, safety protocols, and cultural wisdom around fire’s dangers, we must develop AI governance, ethical frameworks, and shared responsibility for AI’s impact on human society. We must teach our children about AI’s dual nature just as we taught them “don’t play with fire.”

The choice before us is simple: We can rush headlong into AI’s power and repeat the burned villages of our past, or we can apply 400,000 years of hard-won wisdom about managing transformative technology.

We can demand the same safety standards, ethical frameworks, and cultural wisdom that our ancestors developed for fire. We can insist on transparency, human oversight, and democratic governance of AI systems that affect our lives.

We have just discovered fire.

What we do next will determine whether AI becomes humanity’s greatest tool or its most dangerous mistake. The tribes that learned to master fire didn’t just survive—they built civilization.

Now it’s our turn.

Ready to Master Fire Instead of Playing with It?

The pattern is clear: some will thrive with AI, others will get burned. The difference isn’t luck; it’s preparation.

If you’re ready to move beyond hoping AI works out to systematically mastering its power, you need the same thing our ancestors needed: proven frameworks, safety protocols, and guidance from those who’ve already learned the hard lessons.

The AI-First Marketing Playbook is your comprehensive guide to responsible AI mastery. Just as fire departments didn’t emerge overnight, we’ve spent years developing the systematic approaches that separate AI success stories from AI disasters.